
I am a graduate student at

My primary interests lie in embodied AI (robotics and 3D vision). I am a lifelong learner and I aim to build things that work in the real world. I am always open to conversations. If you share similar interests or simply want to chat, feel free to reach out!

Email / Twitter / WeChat / Google Scholar / GitHub / LinkedIn


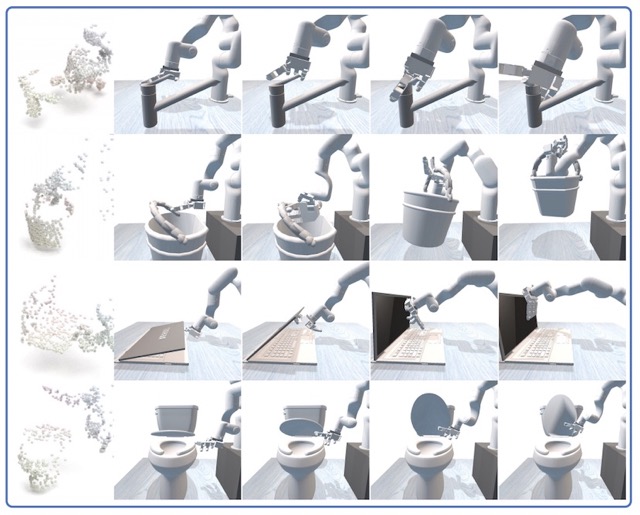

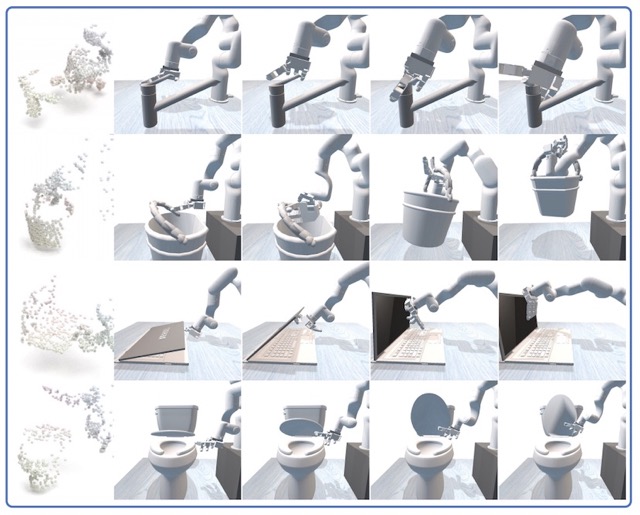

DexArt: Benchmarking Generalizable Dexterous Manipulation with Articulated Objects

Chen Bao*, Helin Xu*, Yuzhe Qin, Xiaolong Wang†

CVPR 2023

ArXiv / Project Page / Code

We propose DexArt, a task suite for Dexterous manipulation with Articulated objects using point cloud observations. We benchmark a range of methods that learn category-level manipulation policies on seen objects. We evaluate the policies’ generalizability on a collection of unseen objects, as well as their robustness to changes in camera viewpoint.

GAPartNet: Cross-Category Domain-Generalizable Object Perception and Manipulation via Generalizable and Actionable Parts

Haoran Geng*, Helin Xu*, Chengyang Zhao*, Chao Xu, Li Yi, Siyuan Huang, He Wang†

CVPR 2023, Highlight (top 2.5% of all submissions)

ArXiv / Project Page / Code / Dataset

We propose to learn generalizable object perception and manipulation skills via Generalizable and Actionable Parts, and present GAPartNet, a large-scale interactive dataset with rich part annotations.

Dora AI: Generating powerful websites, one prompt at a time

Project completed while I was a research engineer at Dora.run Ltd (a startup).

Project Page / Product Hunt / Awards / Twitter

We launched Dora AI, the first end-to-end AI website builder on the market. It has become a successful AI product, winning Product Hunt's Best AI Products of the Year award and ranking ahead of Google Bard, Piki, and others. Dora AI generates complete websites from a simple prompt, helping users create websites quickly and easily without any coding or design skills.

2024.01 - Present Research Advisor: Prof. Hao Su

2023.04 - 2023.10 Product Delivered: Dora AI

2022.03 - 2023.04 Research Advisor: Prof. Xiaolong Wang

2021.04 - 2022.03 Research Advisor: Prof. He Wang

2020.06 - 2021.04 Research Advisor: Prof. Yebin Liu |